By Frederic Bourget

In a recent blog post, we discussed how improved trust in, and understanding of, the capabilities of AI-powered Active Learning solutions are leading to increased use of the technology in the legal industry.

Rather than changing the way they practice to fit the technology into their workflow, attorneys are initially just leveraging the additional information that Active Learning delivers in the background to help validate and defend their processes. It’s only when they find gaps or ways to gain efficiencies that they seek to optimize their eDiscovery workflow to incorporate the technology.

Trust in Active Learning, however, often comes gradually, as the tool becomes more accurate when users “teach” it to return more relevant documents and make more accurate predictions.

In this post, I’ll discuss IPRO’s approach to Active Learning and how our solutions offer various features to help you leverage the technology at your own pace, increase its accuracy on your cases, and eventually improve the efficiency of your eDiscovery processes.

Enabling Active Learning by Default

IPRO enables Active Learning in the background by default to help users become accustomed to the technology without disrupting their workflow. After you create your review pass and identify the tags you plan to use for surfacing relevant documents, the Active Learning process begins.

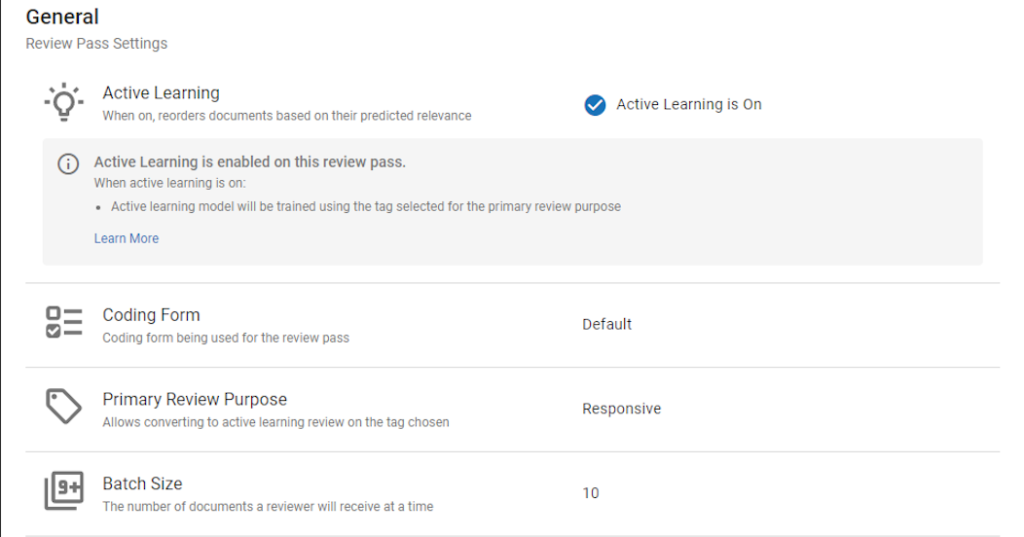

Figure 1: Review Pass Settings with Active Learning on.

If you prefer not to have Active Learning running in the background, it can be turned off across your entire environment. At some point in the review, you can set a review pass to Active Learning enabled to start prioritizing predicted positive documents within that pass. Enabled review passes still run transparently to reviewers, but the order in which documents are presented to them changes.

When enabled, Active Learning is automatically trained using previously selected tags for the primary review purpose. As documents are loaded in a case, the technology analyzes them to identify whether they have enough good quality text to make relevancy predictions. At the onset of review, documents are strategically pulled from the clusters and presented to the reviewer for tagging.

Combining Manual and Active Learning Reviews

On by default, IPRO’s Active Learning is meant simply to accompany your standard processes. You can always tell whether it’s enabled on any review pass you’re working on.

With Active Learning, reviewers are continually presented with the documents the technology predicts as relevant, in prioritized order, allowing them to review and tag documents much more quickly while improving accuracy. As the review continues, relevant documents are ordered by highest relevancy ranking.
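To make the idea of a prioritized queue concrete, here is a minimal Python sketch. It is an illustration only, not IPRO’s implementation: the `Doc` class, its `score` field, and the `next_batch` function are hypothetical names, and the relevancy score is assumed to be a number between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class Doc:
    doc_id: str
    score: float            # hypothetical predicted relevancy, assumed in [0, 1]
    reviewed: bool = False  # True once a reviewer has marked the document "Reviewed"


def next_batch(docs, batch_size):
    """Return up to batch_size unreviewed documents, highest score first."""
    unreviewed = [d for d in docs if not d.reviewed]
    unreviewed.sort(key=lambda d: d.score, reverse=True)
    return unreviewed[:batch_size]


docs = [
    Doc("A-001", 0.20),
    Doc("A-002", 0.90, reviewed=True),
    Doc("A-003", 0.70),
    Doc("A-004", 0.50),
]
for doc in next_batch(docs, batch_size=2):
    print(doc.doc_id, doc.score)
```

In this toy example the already reviewed document is skipped, and the two highest-scoring remaining documents are served first.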

IPRO’s Active Learning learns from the work done by reviewers. If the Primary Review Purpose tag is applied to a document, then the system will learn from that document. If the document is marked “Reviewed” and the Primary Review Purpose tag is applied, then that document is recorded as a “positive”. If the document is marked “Reviewed” and the Primary Review Purpose tag is not applied, then that document is recorded as a “negative”.
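The positive and negative rules above can be written as a small decision function. This is a sketch of the rule as described in this post, not product code: the function name `training_label` and its parameters are hypothetical, and treating a document that has not been marked “Reviewed” as having no label yet is an assumption.

```python
from typing import Optional


def training_label(reviewed: bool, primary_tag_applied: bool) -> Optional[str]:
    """Map a reviewer's coding decision to a training label."""
    if not reviewed:
        # Assumption: documents not yet marked "Reviewed" carry no label.
        return None
    return "positive" if primary_tag_applied else "negative"


print(training_label(reviewed=True, primary_tag_applied=True))
print(training_label(reviewed=True, primary_tag_applied=False))
print(training_label(reviewed=False, primary_tag_applied=False))
```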

Once you have exhausted the highly ranked documents, the tool’s algorithm will shift: relevant documents start tapering off and are replaced by non-relevant documents. When you begin to receive mostly non-relevant documents, you can decide to stop reviewing.

If you decide to continue, the following tools can still help you increase quality.
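One simple way to notice that tapering is to track how many of the most recently reviewed documents were tagged relevant. The sketch below is a hypothetical heuristic for illustration, not a feature description: the window of 100 documents and the 5% threshold are arbitrary assumptions, and the decision to stop remains a judgment call for the review team.

```python
def recent_relevance_rate(labels, window):
    """Fraction of the last `window` reviewed documents tagged relevant."""
    if window <= 0:
        raise ValueError("window must be positive")
    recent = labels[-window:]
    if not recent:
        return 0.0
    return sum(recent) / len(recent)


def should_consider_stopping(labels, window=100, threshold=0.05):
    """True when a full window has been reviewed and few documents in it were relevant.

    `labels` is a list of booleans in review order (True means tagged relevant).
    The window and threshold defaults are illustrative assumptions.
    """
    if len(labels) < window:
        return False
    return recent_relevance_rate(labels, window) <= threshold


early = [True] * 100                  # review still surfacing relevant documents
late = [True] * 50 + [False] * 100    # last 100 documents were all non-relevant
print(should_consider_stopping(early))
print(should_consider_stopping(late))
```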

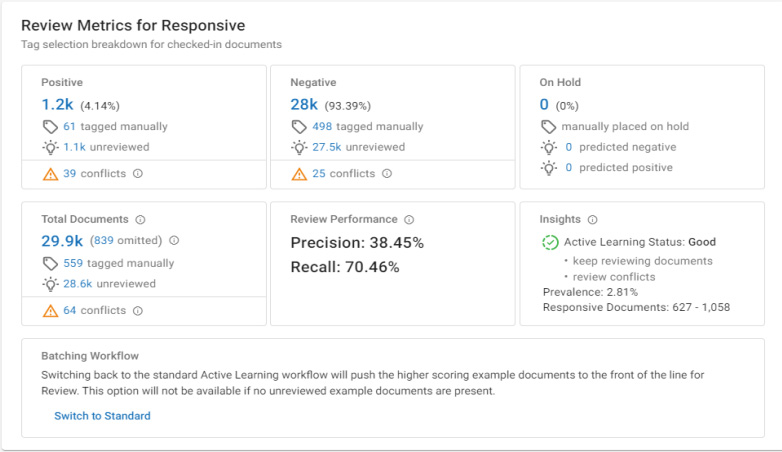

Figure 2: High Level Review dashboard with manual available and review conflicts – blue items are clickable.

Validating AI-Powered eDiscovery Processes

When you’ve determined it’s reasonable to suspend review after achieving reasonable recall with Active Learning, you may still need to make your newly improved review process more defensible. IPRO enables you to validate Active Learning results through sampling and additional quality control processes.

Conflicts are bound to happen with Active Learning, especially as the algorithm receives more and more training input and refines its predictions. They occur when the technology’s algorithm predicted a document one way, but a reviewer tagged it the other way. You can see and click those as shown in Figure 2.
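As a rough illustration of what a conflict is, the sketch below compares a predicted score against the reviewer’s tag. The record layout, the `find_conflicts` name, and the 0.5 cutoff for calling a prediction “relevant” are all assumptions made for the example, not details of how IPRO computes conflicts.

```python
def find_conflicts(records, cutoff=0.5):
    """Return IDs of documents where the prediction and the reviewer disagree.

    Each record is (doc_id, predicted_score, tagged_relevant).
    The cutoff is a hypothetical threshold for a "relevant" prediction.
    """
    conflicts = []
    for doc_id, score, tagged_relevant in records:
        predicted_relevant = score >= cutoff
        if predicted_relevant != tagged_relevant:
            conflicts.append(doc_id)
    return conflicts


records = [
    ("B-001", 0.92, True),   # prediction and reviewer agree
    ("B-002", 0.88, False),  # predicted relevant, tagged not relevant
    ("B-003", 0.10, True),   # predicted not relevant, tagged relevant
    ("B-004", 0.05, False),  # prediction and reviewer agree
]
print(find_conflicts(records))
```

A list like this is a natural input for a quality control batch, where a second reviewer decides whether the prediction or the original tag was right.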

Quality control batches can be created as part of an Active Learning enabled review pass in IPRO solutions, but only records that are part of the original review pass can be added to a quality control batch.

These batches can be used for almost any purpose, but most often are used for: Reviewing Conflicts, Coding Quality Review Relevancy Score, or Quality Control late-stage elusion testing.
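For readers unfamiliar with elusion testing, the arithmetic is straightforward: review a random sample of the documents left unreviewed, see what fraction turn out to be relevant, and project that rate onto the whole unreviewed set. The sketch below shows that general calculation under stated assumptions. The function and parameter names are hypothetical, the numbers are invented for illustration, and it produces a simple point estimate without a confidence interval.

```python
def elusion_estimate(sample_size, relevant_in_sample, unreviewed_total, relevant_found):
    """Estimate elusion rate, missed relevant documents, and recall.

    sample_size        -- documents randomly sampled from the unreviewed set
    relevant_in_sample -- how many sampled documents were tagged relevant
    unreviewed_total   -- size of the whole unreviewed set
    relevant_found     -- relevant documents already found during review
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    elusion_rate = relevant_in_sample / sample_size
    estimated_missed = elusion_rate * unreviewed_total
    total_relevant = relevant_found + estimated_missed
    # Recall is undefined when no relevant documents exist at all.
    recall = relevant_found / total_relevant if total_relevant > 0 else None
    return elusion_rate, estimated_missed, recall


# Invented numbers: 5 relevant documents in a sample of 500,
# 20,000 documents left unreviewed, 1,900 relevant documents already found.
rate, missed, recall = elusion_estimate(500, 5, 20_000, 1_900)
print(f"elusion rate: {rate:.2%}")
print(f"estimated missed documents: {missed:.0f}")
print(f"estimated recall: {recall:.1%}")
```

With these invented numbers, the elusion rate is 1%, which projects to about 200 missed documents and an estimated recall of roughly 90%.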

Conclusion

Quality control is an essential part of validating manual and AI-powered review to help optimize your eDiscovery processes.

IPRO offers analytical dashboards to help reviewers further validate their processes and resolve conflicts.

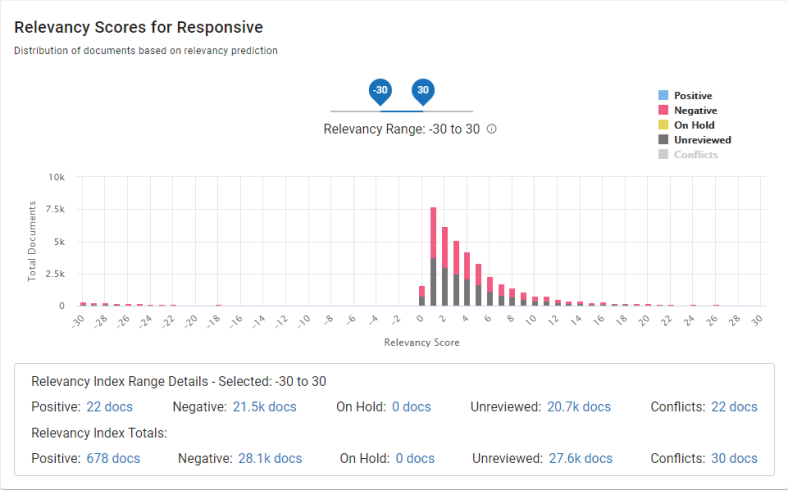

Figure 3: Relevancy scores

For example, our relevancy graph shows scores for responsive documents in a case, along with a listing of unreviewed documents and conflicts, as an embedded quality control feature so you can see how your reviewers are performing. Our Desired Recall graph shows the estimated number of documents to review in order to reach various recall levels.

We also offer graphs of the estimated number of predicted relevant documents for Active Learning review, tag selection, and other insights. Users can also view a chart of review progress by date for responsive, manually reviewed, checked-in documents.

Learn more about IPRO solutions for Active Learning.